A visual presentation of this work can be viewed at http://www.sztaki.hu/~kopacsi/vr/vrfm.htm using Microsoft Internet Explorer, provided some technical requirements are fulfilled...

In the age of globalisation it is increasingly common for the users or analysts of a simulation system to work in different locations. This distant work requires remote applications of virtual reality based simulation. Today the infrastructure of the Internet provides the hardware and software basis for establishing communication between users and remote applications, but choosing the best tools and methods is still an active field of research.

The first step in immersion is to provide the spatial relationship between the user and the environment. This may be achieved through the use of location and orientation tracking devices. Tracking the user's head allows the computer to generate correct images for the viewer. Tracking other parts of the body allows further interaction of the user with her environment.

The typical VR display is a wall-projection display that uses either a large computer screen or a rear projection display. These systems can provide a good sense of depth, but the feeling of immersion is very low. They are relatively inexpensive and non-intrusive. A greater impression of immersion may be achieved through head-mounted or helmet-mounted displays (HMD), which consist of a pair of LCD or CRT display devices mounted on a helmet worn by the user. In some HMDs there is only one display, connected to a system of lenses and mirrors that conveys the image onto the retinas of the eyes.

The planning, design and operation of an FMS are very complex tasks because of the high value of the system and of the goods produced, the complexity of the control algorithms, the integrated structure of the controlling components of the system, and the large amounts of real-time data on machine and product status.

Applying VR technology in manufacturing systems requires new types of interfaces, reflecting the evolution of the man-machine interface from traditional devices (computer screen, keyboard, mouse) to more sophisticated tools such as head-mounted displays, data gloves and simulator joysticks.

For the above reasons manufacturing appears to be a major beneficiary of Virtual Reality technology and of its evolving capabilities. VR can considerably improve design and production processes and introduce new approaches to computer controlled manufacturing systems. Virtual Reality could be applied in three important fields of the FMS life cycle, namely factory layout design, process planning, and control of the FMS, as follows.

The VR environment would enable people to mimic the motions of the manufacturing staff and evaluate the layout for ergonomics, reach, access, etc. Furthermore, modifications to existing, functioning manufacturing facilities could be planned and tested without interrupting the ongoing manufacturing process.

Loading and unloading parts, operating the machine, executing the NC/CNC program, and other operations can be verified and observed before the machining instructions are installed in the FMS controllers. Machining time and motion studies can be performed, making it possible to assess tool wear and the efficiency of the tool selection algorithms.

When VR technology is integrated with high-bandwidth network channels, these environments can offer tele-presence features [6], which enable experts to remotely share the tasks of a local operator. Such situations are of very high value, because trained experts can be virtually present at any spot around the globe. The tele-presence feature can help in tracking real manufacturing processes where the system and the observer are in different locations. Tele-presence can be extended to tele-operation, when the system is not only observed but also controlled from a remote location. In both cases VR technology can be useful when it is impossible to establish appropriate feedback from the remote site to the observer or controller. In these situations the real system should be modelled and often visualised, so that the remote observer or controller gets information not only from the real operation, but also from the model that integrates real and simulated data.

Regarding technology aspects, FMS environments are dangerous machine structures, and operators are not allowed to roam freely in the cells. For example, Automated Guided Vehicles (AGV) or robotic cells ought not to operate when humans are present in their working environment. VR technology could allow the operators to run the FMS while being only virtually present at hazardous sites. Such applications also call for very sophisticated, real-time multimedia database tools.

A UNIMATE PUMA 760 assembly robot, which can serve two pallets at the same time, is located in the assembly cell. An OPTON UMC 850 measuring unit operates in the measuring cell. Our VR system concentrates on the simulation of the manufacturing and assembly cells of this plant.

The PC Glasstron is a unique head mounted display that creates a high resolution, virtual 30" image when connected to the computer (or video source). With built-in ear buds for stereo sound it has full multimedia capability. Its internal dual LCD panels create an impressive, large screen picture. This tool has been used successfully for virtual round table scenarios at GMD [7].

For navigation we have chosen a flight simulator joystick by QuickShot (see Fig. 3), with which the user can drive a virtual helicopter that flies around the simulated objects.

Creating a Virtual Reality environment for manufacturing applications also requires powerful simulation and animation software tools. Taylor ED (Enterprise Dynamics) is a family of software products for modelling, simulating, visualising and controlling business and real-life processes [8]. It offers the features necessary to build complicated models and animations of manufacturing systems. Thanks to the open structure of the program, the range of possible applications is very wide, and different suppliers are expected to continue publishing new libraries of atoms (the building blocks in ED) for use with Taylor ED. One or more 2D and 3D views can be defined relatively easily. Each view may have a different viewpoint, camera position and camera angle. Taylor ED is an effective simulation tool, but the quality of its 3D images is not satisfactory for our purposes.

For this reason we decided to use a VRML tool, controlled by Taylor ED, for visualisation, which provides flexibility and generality. Several tools exist to convert almost any CAD format to VRML, so geometric models can easily be imported from whatever format they already exist in, without having to redraw them. VRML 3D browsers can be installed as free plug-ins within common free 2D browsers, such as Netscape or Internet Explorer. Furthermore, as VRML can exchange messages with external programs written in Java, scenes can be enhanced with Java's powerful computational and network facilities. For instance, position data can be received via sockets either from the web or from other programs on the local computer, interpreted and processed in Java, and then finally routed to the VRML scene.

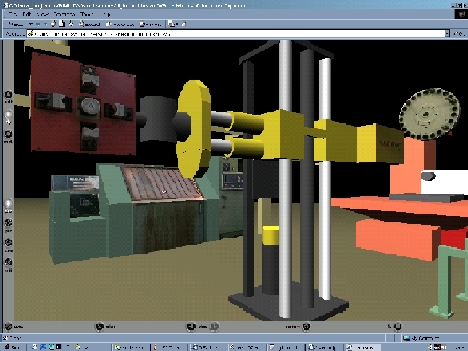

We created the VRML images of the simulated pilot plant using 3D Studio MAX, extended with real JPG images. The JPG images were taken with a Panasonic digital video camera and imported as textures to make the appearance of the simulation objects more realistic. We used the Cortona VRML plug-in in our project because it is small, fast and rich in important features, as follows. VRML objects can be embedded in HTML pages and can also be viewed full screen. Cortona supports input devices (e.g. a joystick) connected to the game port, as well as real 3D views, which are available with special hardware elements (an appropriate VGA card with glasses and a monitor with a fast refresh rate).

Since our VRML scene has to be controlled remotely, we had to choose one of the several possibilities that the VRML standard offers for programming the virtual world. For technical reasons the External Authoring Interface (EAI) was chosen. With the EAI a Java applet can obtain references to the objects of the VRML scene and modify their attributes to generate the necessary changes in the appearance of the virtual world.

In the first version of the system TCP/IP communication was used, but the speed of communication was critical in some cases. In the present version we use the User Datagram Protocol (UDP), which allows much faster communication over the Internet since, unlike TCP, it provides no flow control or retransmission. The price of the heavily reduced communication overhead is that data packets can occasionally be lost. When simulations are run on reliable networks or on single hosts, this is rarely a problem. When simulations are run over the Internet, however, packet loss may happen, and the protocol built on UDP (in this case DIS) must tolerate this. This concept has been applied in the EURODOCKER project as well [9].
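One common way for a receiver to tolerate lost or late datagrams is to number each update and keep only the newest one. The following Java sketch illustrates that idea; it is not the project's actual code. The class name `UdpStateChannel`, the 8-byte sequence prefix and the sample payload are all assumptions made for illustration.

```java
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: frames state updates for UDP and discards stale datagrams. */
final class UdpStateChannel {
    private long lastSeq = -1; // highest sequence number accepted so far

    /** Prefixes the payload with an 8-byte sequence number (assumed framing). */
    static byte[] frame(long seq, String payload) {
        byte[] body = payload.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(Long.BYTES + body.length).putLong(seq).put(body).array();
    }

    /** Returns the payload if the datagram is newer than anything seen so far, else null. */
    String accept(byte[] data, int length) {
        long seq = ByteBuffer.wrap(data, 0, length).getLong();
        if (seq <= lastSeq) {
            return null; // duplicate or late packet: ignore it
        }
        lastSeq = seq;   // gaps left by lost packets are simply skipped
        return new String(data, Long.BYTES, length - Long.BYTES, StandardCharsets.UTF_8);
    }

    /** Loopback demonstration: sends one framed update and prints the received payload. */
    public static void main(String[] args) throws Exception {
        try (DatagramSocket receiver = new DatagramSocket(0, InetAddress.getLoopbackAddress());
             DatagramSocket sender = new DatagramSocket()) {
            receiver.setSoTimeout(1000); // do not block forever if the datagram is lost
            byte[] out = frame(1, "AGV1 12.5 3.0 0.0");
            sender.send(new DatagramPacket(out, out.length,
                    receiver.getLocalAddress(), receiver.getLocalPort()));
            byte[] buf = new byte[512];
            DatagramPacket in = new DatagramPacket(buf, buf.length);
            receiver.receive(in);
            System.out.println(new UdpStateChannel().accept(in.getData(), in.getLength()));
        }
    }
}
```

Because each update carries the complete state of an object, a skipped packet costs only one animation step, and the next update that arrives corrects the scene.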

Figure 4: Physical Structure of the System

The Simulator itself has been realised in 4Dscript, the programming language of Taylor ED. 4Dscript makes it possible to establish network connections via sockets, so every atom that has to be visualised can send its state variables through the socket connection. If the state of an atom (position, orientation, colour, etc.) changes, the atom sends the new information to a Java application called the Transmitter. During continuous state changes (e.g. a moving AGV) an event is generated periodically in the atom to send the state variables that are needed to animate the object's movement.
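The text does not specify the format of the state messages. As a minimal sketch of what the Java side could decode, the class below assumes a hypothetical wire format of one space-separated line per update: name, x, y, z and a heading angle. The class name `AtomState` and its fields are illustrative only.

```java
import java.util.Locale;

/** Hypothetical wire format: "name x y z heading", space separated, one update per line. */
final class AtomState {
    final String name;     // atom identifier, assumed to contain no whitespace
    final double x, y, z;  // position in scene units
    final double heading;  // rotation about the vertical axis, in radians

    AtomState(String name, double x, double y, double z, double heading) {
        this.name = name;
        this.x = x;
        this.y = y;
        this.z = z;
        this.heading = heading;
    }

    /** Serialises the state; Locale.ROOT keeps '.' as the decimal separator. */
    String encode() {
        return String.format(Locale.ROOT, "%s %.3f %.3f %.3f %.4f", name, x, y, z, heading);
    }

    /** Parses one line in the assumed format, rejecting malformed input. */
    static AtomState parse(String line) {
        String[] f = line.trim().split("\\s+");
        if (f.length != 5) {
            throw new IllegalArgumentException("expected 5 fields: " + line);
        }
        return new AtomState(f[0],
                Double.parseDouble(f[1]), Double.parseDouble(f[2]),
                Double.parseDouble(f[3]), Double.parseDouble(f[4]));
    }
}
```

A moving AGV would then produce a stream of such lines, one per periodic event, for example `AtomState.parse("AGV1 12.500 0.000 -3.250 1.5708")`.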

The Transmitter is a Java application that receives the objects' updated state information from the simulation in Taylor ED and sends it to the Receiver, an applet controlling the scene in the VR browser. The work of the Transmitter starts with a main thread. After some initialisation the main thread blocks, waiting for the Simulator to connect. When the connection request of the simulation is accepted, a new thread called ServeTED is created to receive and store the information arriving from the Simulator. After creating ServeTED the main thread blocks again, waiting for the applets to make their requests. As soon as an applet wants to connect, a new thread (ServeApplet) is created to serve it, and the main thread waits for the next applet's request. A ServeApplet thread begins to run when its applet makes a request for new data, and sends the state variables of all the objects that have been modified since the applet's last request.
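The "modified since the last request" bookkeeping can be sketched with a shared store and a version counter. This is one possible design under stated assumptions, not the Transmitter's actual implementation. The names `StateStore`, `Snapshot`, `update` and `poll` are hypothetical: a ServeTED-like thread would call `update`, and each ServeApplet-like thread would call `poll` with the version it received last time.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical shared store between the simulator thread and the applet threads. */
final class StateStore {
    /** Result of one poll: the changed states plus the version to pass to the next poll. */
    static final class Snapshot {
        final long version;
        final List<String> states;

        Snapshot(long version, List<String> states) {
            this.version = version;
            this.states = states;
        }
    }

    private static final class Entry {
        final String state;
        final long version;

        Entry(String state, long version) {
            this.state = state;
            this.version = version;
        }
    }

    private final Map<String, Entry> latest = new HashMap<>();
    private long version = 0; // incremented on every update

    /** Called by the simulator-facing thread whenever an object's state changes. */
    synchronized void update(String objectName, String state) {
        latest.put(objectName, new Entry(state, ++version));
    }

    /** Called by an applet-facing thread; returns only states newer than sinceVersion. */
    synchronized Snapshot poll(long sinceVersion) {
        List<String> changed = new ArrayList<>();
        for (Entry e : latest.values()) {
            if (e.version > sinceVersion) {
                changed.add(e.state);
            }
        }
        return new Snapshot(version, changed);
    }
}
```

Returning the version together with the changed states in a single synchronized call means that an update arriving between two polls cannot be missed. Because only the latest state of each object is stored, a slow client receives at most one entry per object.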

There are two main reasons that justify the use of this middle-tier application in the communication chain. First, it gives more control over serving multiple clients than 4Dscript could give, and it enables the use of different socket types (datagram instead of stream, see later) if we have to change them in the further development of this project. Second, the Transmitter and the Web Server (which hosts the visualising HTML page) can run on the same machine, in which case we do not have to use a signed applet. Applets are allowed to open network connections to the machine from which they have been downloaded without any special security permission.

Restrictions are applied to applets for security reasons. For example, they cannot read or write files on the client computer, and they cannot open a socket to a computer other than the one from which they have been downloaded. These restrictions can, however, be lifted by signing the applet. To sign an applet, a digital certificate is needed from a Certificate Authority (CA). When the browser downloads a signed applet, it displays a message informing the user who the signer of the applet is and which CA issued the certificate. If the user trusts both the signer and the CA, he or she will choose to launch the applet.

The user can immerse in the 3D animation (see Fig. 5) through a VRML page available on the Internet (http://www.sztaki.hu/~kopacsi/vr/vrfm.htm) using Microsoft Internet Explorer fulfilling some technical requirements.... The virtual world appears either in the head-mounted display or on a graphical display. The user can navigate in the system as if she or he were sitting in a helicopter, using the flight simulator joystick. It is possible to move closer to or farther from the objects, up and down, right and left. The user can turn and move around as if she or he really were in the system.

After the HTML page has been downloaded, the user can examine the virtual manufacturing cell in the VRML browser, and the applet offers the possibility to connect the scene to the simulation.

The applet, like the Transmitter, is multi-threaded. While one thread deals with the communication and updates the VRML scene, the other monitors the user's interactions. It is planned to enable the user to interact with the simulation either through the VRML scene or through the user interface of the applet. It is essential to let the user at least tune the simulation speed, and stop or reset the simulation.

| [1] | Figueiredo, M., Böhm, K., Teixeira, J.: Advanced Interaction Techniques in Virtual Environments. In: Computers & Graphics, Vol. 17, No. 6, Pergamon Press Ltd, 1993, pp. 655-661. |

| [2] | Chuter, C., Jernigan, S., Barber, K.: A Virtual Environment Simulator For Reactive Manufacturing Schedules. In: Proc. of Symposium on Virtual Reality in Manufacturing, Research and Education, UIC The University of Illinois at Chicago, October 7-8, 1996. |

| [3] | Ránky, P.: Components and Planning of Flexible Manufacturing Systems, Introduction to CIM Systems, Industrial Information Centre, Budapest, 1985. (in Hungarian) |

| [4] | Carrie, A.S., Adhami, E., Stephens, A., Murdoch, I.C.: Introducing a flexible manufacturing system. In: Int. J. of Prod. Research, Vol. 22, No. 6, 1984, pp. 907-916. |

| [5] | Erdélyi, F., Tóth, T., Rayegani, F.: Real-time Control of Manufacturing Systems on the Base of Multi-Layered Model. In: Proceedings of the Second Mexican-Hungarian Workshop on Factory Automation and Material Sciences, Mexico, 1999, pp. 37-50. |

| [6] | Haidegger, G., Kopácsi, S.: Telepresence with Remote Vision in Robotic Applications. In: Proceedings of the 5th International Workshop on Robotics in Alpe-Adria-Danube Region RAAD 96, Volume 1, pp. 557-562. |

| [7] | Broll, W.: Augmenting the Common Working Environment by Virtual Objects. In: ERCIM News, No. 44, January 2001, pp. 42-43. |

| [8] | Taylor Enterprise Dynamics, User Manual, F&H Simulation B.V., 2000. |

| [9] | http://www.iau.dtu.dk/~tdl/task52ab.html |

| [10] | Bejczy, A.K.: Virtual reality in manufacturing. In: Re-engineering for Sustainable Industrial Production, Proceedings of the OE/IFIP/IEEE Int. Conf. on Integrated and Sustainable Industrial Production, Lisbon, Portugal, May 1997, pp. 48-60. |

| [11] | Mezgár, I., Kovács, G.L.: Co-ordination of SME production through a co-operative network. In: Journal of Intelligent Manufacturing (1998) 9, pp. 167-172. |

Copyright © 2001 Sándor Kopácsi, kopacsi@sztaki.hu

Research Project in MTA SzTAKI at CIM Laboratory